The beamline on Jefferson Lab’s Continuous Electron Beam Accelerator Facility is monitored in the Machine Control Center during a Jefferson Lab campus tour. (Jefferson Lab photo/Aileen Devlin)

Machine learning project aims to improve accelerator performance by streamlining beam-tuning tasks

NEWPORT NEWS, VA – When racing through the streets of Tokyo – on a sanctioned road course, that is – a little drift can be a good thing.

But inside the primary particle accelerator at the U.S. Department of Energy’s Thomas Jefferson National Accelerator Facility, even the tiniest drift of the electron beam can bring scientific research to a screeching halt. It also can make operators in the accelerator’s control room furious. Fast.

“Beam tuning can be a maddening process,” said Chris Tennant, a Jefferson Lab staff scientist in the Center for Advanced Studies of Accelerators. “It’s very iterative. It is brute force and a lot of trial and error. It is turning knobs and seeing what effect that has.”

The Continuous Electron Beam Accelerator Facility, a world-class DOE User Facility, is a ⅞-mile particle “racetrack” relied upon by more than 1,800 scientists who study the nature of the universe at subatomic scales.

To support that level of physics-quality research, CEBAF’s particle beam needs to be tuned just right – and often. But beam tuning is one of the largest contributors to accelerator downtime.

This led Tennant to assemble a team of accelerator and computer scientists from Jefferson Lab and the University of Virginia. Their mission: Apply an adaptive, self-teaching computer model – aka machine learning – to streamline CEBAF beam tuning.

“I felt there is a more efficient way of doing this,” Tennant said, “a data-driven way.”

The project first got traction in 2021 under Jefferson Lab’s Laboratory Directed Research and Development program. Now, the DOE has given it a green flag in the form of a $1 million grant over the next two years.

‘Deep’ roots

Tennant’s study is a sequel to an earlier machine learning project that also involved CEBAF operations, though not beam drift.

Because of the enormous resources required to operate CEBAF, scientific research using its souped-up electron beam is limited to several operational periods each year. So, during every precious experimental run, CEBAF needs to perform at its best.

The electron beam is fueled by 418 superconducting radiofrequency (SRF) cavities, which juice the particles up to 12 GeV of energy. But a glitch in just one of those components can interrupt beam delivery, slamming the brakes on scientific research.

The problem sparked Tennant’s first exploration of machine learning in accelerator science, in late 2019. Under the guidance of former Staff Scientist Anna Shabalina, Tennant and a team of accelerator experts built a custom-made machine learning system that could identify glitches in CEBAF’s many SRF cavities. The goal was to more quickly recover CEBAF from those faults.

Shabalina’s proposal was funded by Jefferson Lab’s LDRD program, and the system was installed and tested during CEBAF operations in March 2020. The project was later selected for a $1.35 million DOE grant, allowing Tennant to pick up where Shabalina left off.

“The cavity fault classification project was a great starter,” Tennant said. “You can train a model to identify the fault based on its signature so that someone doesn't have to go through hundreds of plots by hand and do that work. And that was pretty effective.”

In late 2021, Tennant built on that foundation and began developing another machine learning system. The latest installment involves tuning the accelerator’s injector beamline with the help of “deep learning.”

The project is in collaboration with a UVA team led by Assistant Professor Jundong Li and graduate student Song Wang, who provide expertise in artificial intelligence (AI), machine learning and data mining.

“Within the last six years or so, there's been a real boom in using AI in accelerator physics,” Tennant said. “There’s a lot of work being done at various laboratories, but it’s still very young.”

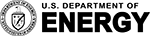

Illustration depicting an arbitrary accelerator beamline (top) and one possible way to construct a corresponding graph representation (bottom). Here the nodes represent individual elements, the node features correspond to appropriate element parameters, a user-specified window of 2 elements defines edges between nodes, and the edge weights correspond to the inverse distance between elements. The edges are directed to capture the fact that an element cannot influence upstream elements of the beamline.
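The graph construction described in the caption can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the team's code: the `Element` class, the element names, positions and feature values are all hypothetical, and "a window of 2 elements" is interpreted here as each element connecting to the next two elements downstream.

```python
from dataclasses import dataclass


@dataclass
class Element:
    name: str        # hypothetical element label
    position: float  # location along the beamline in meters (made-up values)
    features: tuple  # node features, e.g. a magnet strength or cavity gradient


def build_beamline_graph(elements, window=2):
    """Return directed, weighted edges as {(upstream, downstream): weight}.

    Assumption: each element is linked to the next `window` downstream
    elements, and the edge weight is the inverse of their separation.
    """
    ordered = sorted(elements, key=lambda e: e.position)
    edges = {}
    for i, src in enumerate(ordered):
        for dst in ordered[i + 1 : i + 1 + window]:
            distance = dst.position - src.position
            if distance <= 0:
                raise ValueError("elements must have distinct positions")
            # Edges only point downstream, so an element never
            # influences anything upstream of it.
            edges[(src.name, dst.name)] = 1.0 / distance
    return edges


# Made-up four-element beamline for illustration only.
beamline = [
    Element("quad_1", 0.0, (1.2,)),
    Element("cavity_1", 0.5, (5.0,)),
    Element("corrector_1", 1.5, (0.1,)),
    Element("quad_2", 2.0, (-1.1,)),
]
edges = build_beamline_graph(beamline, window=2)
for (src, dst), weight in edges.items():
    print(f"{src} -> {dst}: weight = {weight:.3f}")
```

Because the edges are stored as ordered pairs, the key `("quad_1", "cavity_1")` exists while `("cavity_1", "quad_1")` does not, which is how this sketch encodes the downstream-only direction.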

Forging a new ‘graph’

Born from the global pursuit of AI, machine learning involves self-teaching computer systems that can solve problems with relatively little human input.

One form in particular, deep learning, uses an artificial neural network to teach itself tasks that would otherwise be too costly or labor-intensive for humans. It has applications across a broad swath of industries and lately has made great strides in natural language processing and computer vision.

The idea behind Tennant’s latest proposal involves representing CEBAF’s injector beamline as a graph. But these aren’t the type of graphs you’ll find in a basic math textbook.

“Graphs are being used all over science right now,” Tennant said. “They’re used to model molecules. They’re used to model social networks. They’re a framework that captures complex relationships within data. This encompasses many interesting applications, and an accelerator beamline is one of them.”

Think of any one of CEBAF’s SRF cavities or its 2,200-plus magnets as a node on the graph. The temperature inside the CEBAF tunnel or even the humidity outside could also be represented as a node. How the nodes are connected by edges encodes the relationships between them and results in a highly complex graph. That graph can be used to describe the status of CEBAF’s beamline at a specific point in time.

Tennant’s deep learning model leverages a graph neural network (GNN) to boil that data down into an information-rich representation that can be visualized in two dimensions – a much more digestible form for an accelerator operator. Trained by self-supervised and supervised learning, the GNN can then mine years of archived CEBAF data to identify clusters of “good” and “bad” injector setups.
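To give a feel for how a graph can be reduced to a single point in two dimensions, here is a deliberately simplified sketch. It is not the team's model: a real GNN uses learned weights and nonlinearities and is trained on data, whereas this example runs one untrained message-passing step (each node adds the weighted mean of its upstream neighbors) followed by mean pooling. The node names, features and edge weights are invented for illustration.

```python
def message_pass(features, edges):
    """One round: each node adds the weighted mean of its upstream neighbors."""
    updated = {}
    for node, own in features.items():
        # Collect (neighbor features, edge weight) for edges pointing at `node`.
        incoming = [(features[src], w) for (src, dst), w in edges.items() if dst == node]
        total = sum(w for _, w in incoming)
        if total == 0:
            # No upstream neighbors: the node keeps its own features.
            updated[node] = tuple(own)
            continue
        agg = [sum(w * f[k] for f, w in incoming) / total for k in range(len(own))]
        updated[node] = tuple(o + a for o, a in zip(own, agg))
    return updated


def graph_embedding(node_states):
    """Mean-pool all node states into one vector describing the whole graph."""
    n = len(node_states)
    dim = len(next(iter(node_states.values())))
    return tuple(sum(s[k] for s in node_states.values()) / n for k in range(dim))


# Hypothetical three-node graph with two features per node.
features = {"a": (1.0, 0.0), "b": (0.0, 2.0), "c": (4.0, 4.0)}
edges = {("a", "b"): 2.0, ("a", "c"): 1.0, ("b", "c"): 3.0}

states = message_pass(features, edges)
point = graph_embedding(states)  # one 2D point for this machine state
print(point)
```

Repeating this for many archived machine states would yield one point per state, which is the kind of scatter plot an operator could read at a glance.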

The data-driven approach could help a CEBAF operator tune the beam injection in real time, as opposed to the more laborious process of interacting with a high-fidelity simulation to provide guidance. The GNN would provide the CEBAF operator immediate visual feedback about whether changes to the injector setup are sliding the system in the right direction.

An example of a 2D visualization where each marker represents a low-dimensional embedding of a complex graph (left), the result of clustering analysis and using a label for the “goodness of state” in CEBAF (middle), and using the results to guide beam tuning in a control room setting (right).
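One simple way to turn such a labeled 2D map into operator feedback is to check whether a new setup has moved closer to the center of the "good" region. The sketch below uses a nearest-centroid rule as a stand-in for the clustering analysis described above; the coordinates and labels are made up, and the actual method used by the team may differ.

```python
import math


def centroid(points):
    """Average position of a set of 2D points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


# Hypothetical embeddings of archived setups, already labeled.
good = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.2)]
bad = [(4.0, 4.0), (4.5, 3.5)]
good_c = centroid(good)
bad_c = centroid(bad)


def classify(point):
    """Label a setup by whichever centroid it sits closer to."""
    return "good" if math.dist(point, good_c) <= math.dist(point, bad_c) else "bad"


# Embeddings of the injector setup before and after a tuning change (invented).
before = (3.5, 3.0)
after = (2.0, 1.5)

# The change helps if it shrinks the distance to the "good" centroid.
improving = math.dist(after, good_c) < math.dist(before, good_c)
print(classify(before), "->", classify(after), "| improving:", improving)
```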

What’s next

Tennant’s team has already laid much of the groundwork for the project. The core objectives for the next round of funding include mining and preparing more accelerator data, further training the deep learning model, fine-tuning the low-dimensional visualization of the beam setups, and applying the framework to CEBAF operations.

Then, there’s the matter of explainability – a hot topic in AI.

As machine learning becomes more prevalent in everyday life, it’s being used for decision-making in some high-stakes situations, Tennant said. This makes transparency in that process all the more important.

“The idea is that you want to peer into the so-called ‘black box’ of AI to give you some human understanding,” Tennant said. “If you're going to make decisions in a control room based on an AI, you would like to have some understanding of what's going on.”

If Tennant and his team succeed, they’ll deliver a user-friendly software framework that can be deployed in the CEBAF control room, respond in a timely fashion to changes in the machine, and maintain high performance over time.

“The hope is that it results in more efficient beam tuning, which in turn means more uptime for users,” Tennant said. “That's a pretty bold goal.”

Further Reading

A Gentle Introduction to Graph Neural Networks (distill.pub)

AI Projects at Jefferson Lab

Machine Learning Improves Particle Accelerator Diagnostics

Improving Particle Accelerators with Machine Learning

DOE Funding Boosts Artificial Intelligence Research at Jefferson Lab

Technical Paper: Superconducting radio-frequency cavity fault classification using machine learning at Jefferson Laboratory, Physical Review Accelerators and Beams

By Matt Cahill

Contact: Matt Cahill, Jefferson Lab Communications Office, cahill@jlab.org